Spark Architecture

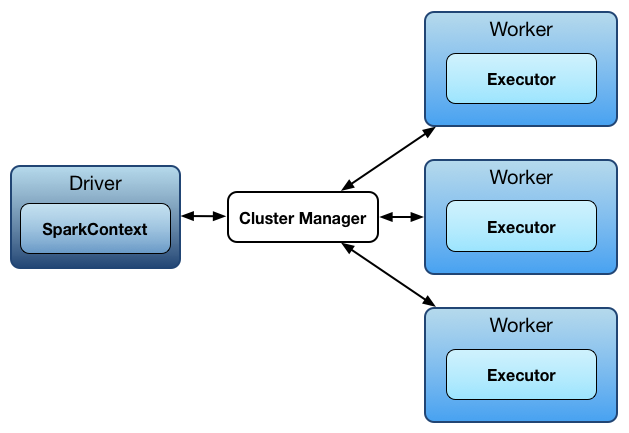

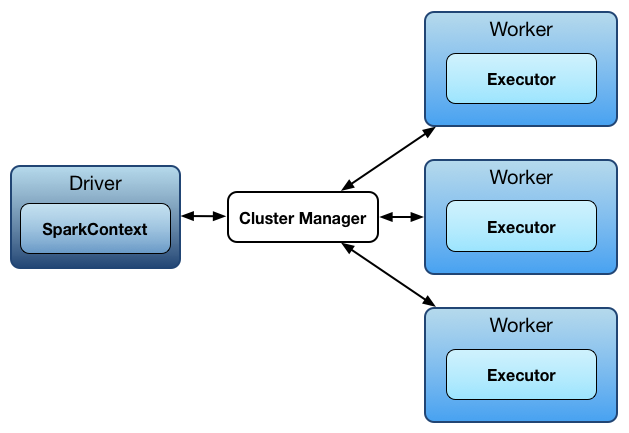

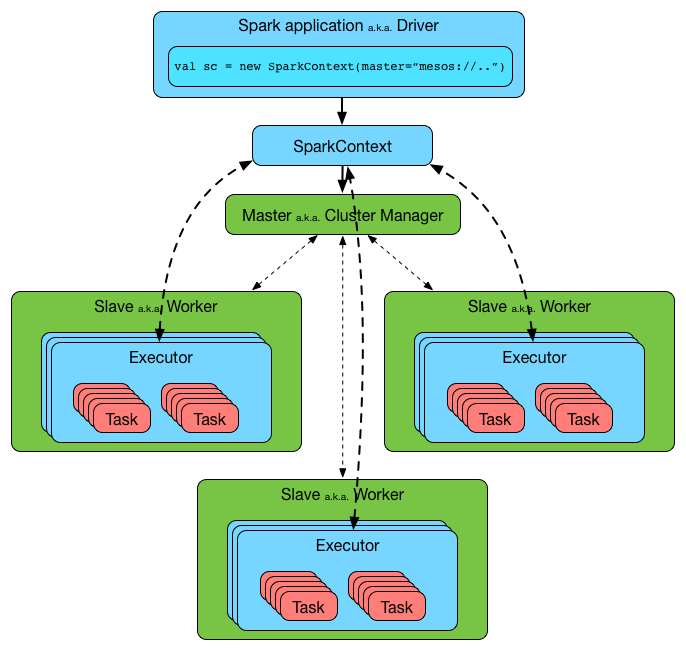

Spark uses a master/worker architecture. A driver talks to a single coordinator, called the master, which manages the workers on which executors run.

Figure 1. Spark architecture
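The relationships described above can be sketched as a toy model in plain Python. This is not Spark's API: the class names `Driver`, `Master`, `Worker`, and `Executor`, their methods, and the host names `node-1` and `node-2` are all hypothetical, chosen only to mirror the roles in the text. The round-robin hand-out of partitions is likewise an assumption made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Executor:
    """Toy stand-in for an executor process that runs tasks."""
    executor_id: str

    def run(self, task, partition):
        return task(partition)


@dataclass
class Worker:
    """Toy stand-in for a worker, which hosts executors."""
    host: str
    executors: list = field(default_factory=list)

    def launch_executor(self):
        executor = Executor(f"{self.host}-exec-{len(self.executors)}")
        self.executors.append(executor)
        return executor


@dataclass
class Master:
    """Toy stand-in for the single coordinator that manages the workers."""
    workers: list = field(default_factory=list)

    def allocate_executors(self, per_worker=1):
        # Ask every registered worker to launch `per_worker` executors.
        return [w.launch_executor() for w in self.workers for _ in range(per_worker)]


class Driver:
    """Toy stand-in for the driver: it asks the master for executors, then gives them work."""

    def __init__(self, master):
        self.master = master

    def run_job(self, task, partitions):
        executors = self.master.allocate_executors()
        # Hand partitions to executors in round-robin order (an illustrative choice).
        return [
            executors[i % len(executors)].run(task, part)
            for i, part in enumerate(partitions)
        ]


master = Master(workers=[Worker("node-1"), Worker("node-2")])
driver = Driver(master)
partial_sums = driver.run_job(sum, [[1, 2], [3, 4], [5, 6]])
print(partial_sums)  # [3, 7, 11]
```

The point of the sketch is the direction of the calls: the driver only ever goes through the master to obtain executors, and the master only ever reaches executors through the workers that host them.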

The driver and the executors each run in their own Java process (JVM). You can run them all on the same machine (a vertical cluster), on separate machines (a horizontal cluster), or in a mixed configuration.

Figure 2. Spark architecture in detail

Physical machines are called hosts or nodes.
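To make the three placement options concrete, the following minimal sketch classifies a layout from a mapping of processes to hosts. The function `describe_layout`, the process names, and the host names are hypothetical and exist only for this illustration. Nothing here queries a real cluster.

```python
def describe_layout(placement):
    """Classify a layout given a dict of process name -> host name."""
    hosts = set(placement.values())
    if len(hosts) == 1:
        return "same machine"       # every process shares one host
    if len(hosts) == len(placement):
        return "separate machines"  # every process has its own host
    return "mixed"                  # some processes share a host, others do not


same = {"driver": "node-1", "executor-0": "node-1", "executor-1": "node-1"}
separate = {"driver": "node-1", "executor-0": "node-2", "executor-1": "node-3"}
mixed = {"driver": "node-1", "executor-0": "node-2", "executor-1": "node-2"}

for name, layout in [("same", same), ("separate", separate), ("mixed", mixed)]:
    print(name, "->", describe_layout(layout))
```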