val metricsSystem = SparkEnv.get.metricsSystem

MetricsSystem

Spark uses the Metrics 3.1.0 Java library to give you insight into the Spark subsystems (aka instances), e.g. DAGScheduler, BlockManager, Executor, ExecutorAllocationManager, ExternalShuffleService, etc.

Note: Metrics are only available for cluster modes, i.e. local mode turns metrics off.

| Subsystem Name | When created |
|---|---|
| | Spark Standalone’s … |
| | Spark Standalone’s … |
| | Spark Standalone’s … |
| | Spark on Mesos' … |

Subsystems access their MetricsSystem using SparkEnv.

Caution: FIXME Mention TaskContextImpl and Task.run

org.apache.spark.metrics.source.Source is the top-level class for the metric registries in Spark. Sources expose their internal status.

Metrics System is available at http://localhost:4040/metrics/json/ (for the default setup of a Spark application).

$ http http://localhost:4040/metrics/json/

HTTP/1.1 200 OK

Cache-Control: no-cache, no-store, must-revalidate

Content-Length: 2200

Content-Type: text/json;charset=utf-8

Date: Sat, 25 Feb 2017 14:14:16 GMT

Server: Jetty(9.2.z-SNAPSHOT)

X-Frame-Options: SAMEORIGIN

{

"counters": {

"app-20170225151406-0000.driver.HiveExternalCatalog.fileCacheHits": {

"count": 0

},

"app-20170225151406-0000.driver.HiveExternalCatalog.filesDiscovered": {

"count": 0

},

"app-20170225151406-0000.driver.HiveExternalCatalog.hiveClientCalls": {

"count": 2

},

"app-20170225151406-0000.driver.HiveExternalCatalog.parallelListingJobCount": {

"count": 0

},

"app-20170225151406-0000.driver.HiveExternalCatalog.partitionsFetched": {

"count": 0

}

},

"gauges": {

...

"timers": {

"app-20170225151406-0000.driver.DAGScheduler.messageProcessingTime": {

"count": 0,

"duration_units": "milliseconds",

"m15_rate": 0.0,

"m1_rate": 0.0,

"m5_rate": 0.0,

"max": 0.0,

"mean": 0.0,

"mean_rate": 0.0,

"min": 0.0,

"p50": 0.0,

"p75": 0.0,

"p95": 0.0,

"p98": 0.0,

"p99": 0.0,

"p999": 0.0,

"rate_units": "calls/second",

"stddev": 0.0

}

},

"version": "3.0.0"

}

Note: You can access a Spark subsystem’s MetricsSystem using its corresponding "leading" port, e.g. 4040 for the driver, 8080 for Spark Standalone’s master and applications.

Note: You have to include the trailing slash (/) in the URL to get the output.
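To make the port and trailing-slash notes concrete, here is a minimal sketch of a URL builder for the metrics servlet. `MetricsUrl` and its `fetch` helper are hypothetical names introduced for illustration (they are not part of Spark), and the default path is taken from the driver example earlier on this page.

```scala
// Hypothetical helper, not part of Spark: builds the URL of a metrics servlet.
object MetricsUrl {
  def apply(host: String, port: Int, path: String = "/metrics/json"): String = {
    // Drop one leading and one trailing slash, then always append the
    // trailing slash that the servlet needs to return output.
    val trimmed = path.stripPrefix("/").stripSuffix("/")
    s"http://$host:$port/$trimmed/"
  }

  // Reads the whole response body as a string (needs a running Spark application).
  def fetch(url: String): String = {
    val src = scala.io.Source.fromURL(url)
    try src.mkString finally src.close()
  }
}

object MetricsUrlDemo {
  def main(args: Array[String]): Unit = {
    // Driver of a Spark application with the default web UI port
    println(MetricsUrl("localhost", 4040)) // prints http://localhost:4040/metrics/json/
  }
}
```

`fetch` is left uncalled in the demo because it only works while a Spark application is up and serving the endpoint.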

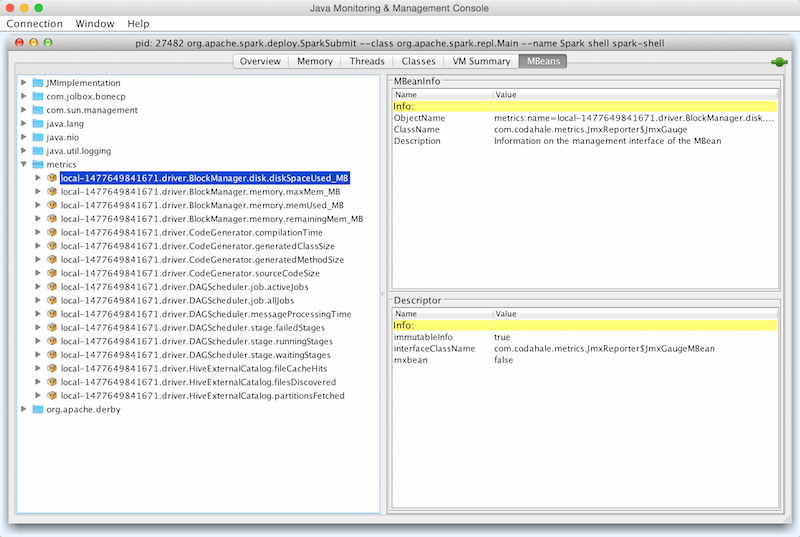

Enable org.apache.spark.metrics.sink.JmxSink in conf/metrics.properties and use jconsole to access Spark metrics through JMX.

*.sink.jmx.class=org.apache.spark.metrics.sink.JmxSink

| Name | Initial Value | Description |
|---|---|---|
| | | Initialized when … |
| | | Flag whether … |
| | (uninitialized) | |

| Name | Description |
|---|---|
| | Metrics sinks in a Spark application. Used when … |

Tip: Enable … Add the following line to … Refer to Logging.

"Static" Metrics Sources for Spark SQL — StaticSources

Caution: FIXME

registerSinks Internal Method

Caution: FIXME

stop Method

Caution: FIXME

removeSource Method

Caution: FIXME

report Method

Caution: FIXME

Master

$ http http://192.168.1.4:8080/metrics/master/json/path

HTTP/1.1 200 OK

Cache-Control: no-cache, no-store, must-revalidate

Content-Length: 207

Content-Type: text/json;charset=UTF-8

Server: Jetty(8.y.z-SNAPSHOT)

X-Frame-Options: SAMEORIGIN

{

"counters": {},

"gauges": {

"master.aliveWorkers": {

"value": 0

},

"master.apps": {

"value": 0

},

"master.waitingApps": {

"value": 0

},

"master.workers": {

"value": 0

}

},

"histograms": {},

"meters": {},

"timers": {},

"version": "3.0.0"

}

Creating MetricsSystem Instance For Subsystem — createMetricsSystem Factory Method

createMetricsSystem(
  instance: String,
  conf: SparkConf,
  securityMgr: SecurityManager): MetricsSystem

createMetricsSystem creates a MetricsSystem.

Note: createMetricsSystem is used when subsystems create their MetricsSystems.

Creating MetricsSystem Instance

MetricsSystem takes the following when created: the subsystem name (instance), a SparkConf, and a SecurityManager.

MetricsSystem initializes the internal registries and counters.

When created, MetricsSystem requests MetricsConfig to initialize.

Note: Use the createMetricsSystem factory method to create a MetricsSystem instead of creating the instance directly.

Registering Metrics Source — registerSource Method

registerSource(source: Source): Unit

registerSource adds source to the sources internal registry.

registerSource creates an identifier for the metrics source and registers it with MetricRegistry.

Note: registerSource uses Metrics' MetricRegistry.register to register a metrics source under a given name.

When registerSource tries to register a name more than once, you should see the following INFO message in the logs:

INFO Metrics already registered
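The registerSource flow can be sketched with a small self-contained model. `ToySource`, `ToyRegistry` and `ToyMetricsSystem` are stand-ins invented for this illustration; they are not Spark's or Metrics' classes. The model assumes that registering a duplicate name fails with an IllegalArgumentException, which is then reported as the INFO message.

```scala
import scala.collection.mutable

// Simplified stand-ins for illustration only (not Spark's or Metrics' classes).
final case class ToySource(sourceName: String)

final class ToyRegistry {
  private val names = mutable.Set.empty[String]

  // Assumption: a name that is already taken is rejected with an exception.
  def register(name: String): Unit =
    if (!names.add(name))
      throw new IllegalArgumentException(s"A metric named $name already exists")
}

final class ToyMetricsSystem {
  val sources = mutable.ArrayBuffer.empty[ToySource]
  val log = mutable.ArrayBuffer.empty[String]
  private val registry = new ToyRegistry

  def registerSource(source: ToySource): Unit = {
    sources += source // 1. add the source to the internal registry of sources
    try {
      registry.register(source.sourceName) // 2. register it under its identifier
    } catch {
      case _: IllegalArgumentException =>
        log += "INFO Metrics already registered" // 3. duplicates are only logged
    }
  }
}

object ToyRegisterSourceDemo {
  def main(args: Array[String]): Unit = {
    val ms = new ToyMetricsSystem
    ms.registerSource(ToySource("DAGScheduler"))
    ms.registerSource(ToySource("DAGScheduler")) // second registration is logged
    ms.log.foreach(println)
  }
}
```

In this model the identifier is simply the source name; how the identifier is built in Spark is the job of buildRegistryName.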

Building Metrics Source Identifier — buildRegistryName Method

buildRegistryName(source: Source): String

Note: buildRegistryName is used to build the metrics source identifiers for a Spark application’s driver and executors, but also for other Spark framework’s components (e.g. Spark Standalone’s master and workers).

Note: buildRegistryName uses spark.metrics.namespace and spark.executor.id Spark properties to differentiate between a Spark application’s driver and executors, and the other Spark framework’s components.

(only when instance is driver or executor) buildRegistryName builds a metrics source name that is made up of spark.metrics.namespace, spark.executor.id and the name of the source.

Note: buildRegistryName uses Metrics' MetricRegistry to build metrics source identifiers.

Caution: FIXME Finish for the other components.
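A rough sketch of the naming rule for the driver and executors follows. `RegistryNames` is a hypothetical object written for this page, and `dotted` stands in for the dot-joining that Metrics' MetricRegistry provides. The fallback to the bare source name for the other components (or when a property is missing) is an assumption, since that part is not described here.

```scala
// Hypothetical model of the naming rule, not Spark's implementation.
object RegistryNames {
  // Joins the non-empty parts with dots (stand-in for Metrics' name builder).
  private def dotted(parts: String*): String =
    parts.filter(_.nonEmpty).mkString(".")

  def build(
      instance: String,
      namespace: Option[String],  // value of spark.metrics.namespace
      executorId: Option[String], // value of spark.executor.id
      sourceName: String): String =
    (instance, namespace, executorId) match {
      // Driver and executors: namespace, executor ID and source name
      case ("driver" | "executor", Some(ns), Some(id)) => dotted(ns, id, sourceName)
      // Assumption: everything else falls back to the bare source name
      case _ => dotted(sourceName)
    }
}

object RegistryNamesDemo {
  def main(args: Array[String]): Unit = {
    // prints app-20170225151406-0000.driver.DAGScheduler
    println(RegistryNames.build(
      "driver", Some("app-20170225151406-0000"), Some("driver"), "DAGScheduler"))
  }
}
```

The driver example reproduces the prefix seen in the JSON output earlier on this page, e.g. app-20170225151406-0000.driver.DAGScheduler.messageProcessingTime.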

Starting MetricsSystem — start Method

start(): Unit

start turns the running flag on.

Note: start can only be called once and throws an IllegalArgumentException otherwise.

start registers the "static" metrics sources for Spark SQL, i.e. CodegenMetrics and HiveCatalogMetrics.

start then calls registerSources followed by registerSinks.

In the end, start starts registered metrics sinks (from sinks registry).
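The order of the steps in start, and the call-once rule, can be modelled in a few lines. `ToyLifecycle` is a made-up class that only records step names; it is a sketch of the sequence described in this section, not Spark's code, and the message passed to `require` is illustrative.

```scala
import scala.collection.mutable

// Made-up model of the start sequence; it records the steps instead of doing work.
final class ToyLifecycle {
  private var running = false
  val steps = mutable.ArrayBuffer.empty[String]

  def start(): Unit = {
    // require throws IllegalArgumentException when the condition is false,
    // which is how a second call is rejected in this model.
    require(!running, "already running")
    running = true
    steps += "register static sources" // CodegenMetrics, HiveCatalogMetrics
    steps += "registerSources"
    steps += "registerSinks"
    steps += "start sinks"
  }
}

object ToyLifecycleDemo {
  def main(args: Array[String]): Unit = {
    val ms = new ToyLifecycle
    ms.start()
    ms.steps.foreach(println)
    try ms.start()
    catch { case e: IllegalArgumentException => println(s"second start rejected: ${e.getMessage}") }
  }
}
```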


Registering Metrics Sources for Current Subsystem — registerSources Internal Method

registerSources(): Unit

registerSources finds the metricsConfig configuration for the current subsystem (aka instance).

Note: instance is defined when MetricsSystem is created.

registerSources finds the configuration of all the metrics sources for the subsystem (as described with source. prefix).

For every metrics source, registerSources finds the class property, creates an instance of that class, and in the end registers it.

When registerSources fails, you should see the following ERROR message in the logs followed by the exception.

ERROR Source class [classPath] cannot be instantiated

Note: registerSources is used exclusively when MetricsSystem is started.
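As an illustration of the registerSources steps, the sketch below looks up the class property of every source, instantiates the class by reflection, and records the ERROR message on failure. `ToyRegisterSources` is hypothetical, and it assumes the configuration has already been narrowed down to the current instance, so keys look like source.[name].class.

```scala
import scala.util.Try

// Hypothetical model of the registerSources steps, not Spark's implementation.
object ToyRegisterSources {
  // Matches keys such as "source.jvm.class" and captures the source name.
  private val ClassKey = """source\.(.+)\.class""".r

  /** Returns the created source instances and the error messages. */
  def apply(conf: Map[String, String]): (List[AnyRef], List[String]) = {
    val results = conf.toList.sortBy(_._1).collect {
      case (ClassKey(_), classPath) =>
        // Create an instance using the no-argument constructor.
        Try(Class.forName(classPath).getDeclaredConstructor().newInstance().asInstanceOf[AnyRef])
          .toEither
          .left.map(_ => s"ERROR Source class $classPath cannot be instantiated")
    }
    (results.collect { case Right(source) => source },
     results.collect { case Left(error) => error })
  }
}

object ToyRegisterSourcesDemo {
  def main(args: Array[String]): Unit = {
    val conf = Map(
      "source.ok.class" -> "java.lang.Object", // placeholder class that can be instantiated
      "source.bad.class" -> "no.such.Source",  // deliberately missing class
      "sink.jmx.class" -> "org.apache.spark.metrics.sink.JmxSink") // ignored: not a source
    val (created, errors) = ToyRegisterSources(conf)
    println(s"created ${created.size} source(s)")
    errors.foreach(println)
  }
}
```

In a real setup the created object would be a metrics source that is then passed to registerSource; java.lang.Object is used here only so the sketch runs without Spark on the classpath.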

Settings

| Spark Property | Default Value | Description |
|---|---|---|
| spark.metrics.namespace | Spark application’s ID (aka …) | Root namespace for metrics reporting. Given a Spark application’s ID changes with every invocation of a Spark application, a custom … Used when … |